The name robots.txt sounds a little out there, especially when you’re new to SEO. Luckily, it sounds way weirder than it actually is. Website owners like you use the robots.txt file to give web robots instructions about their site. More specifically, it tells them which parts of your site you don’t want to be accessed by search engine creepy crawlers.

The first thing a search engine spider looks for when it visits your site is the robots.txt file.

- Why is the robots.txt file important?

- What counts as improper usage of the robots file?

- What does the robots.txt task look like on marketgoo?

- The Robots.txt file on Weebly sites

- The Robots.txt file on Wix sites

- The Robots.txt file on Squarespace sites

- The Robots.txt file on WordPress sites

- The Robots.txt file on Shopify sites

- Best Practices

- I just want to know if my site has a robots.txt file!!

Why is the robots.txt file important?

It is usually used to block search engines like Google from ‘seeing’ certain pages on your website – either because you don’t want your server to be overwhelmed by Google’s crawling, or because you don’t want it crawling unimportant or duplicated pages on your site.
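As a rough illustration of how a well-behaved crawler reads these instructions, the sketch below uses Python's standard-library `urllib.robotparser`. The rules, the `/drafts/` path and the `example.com` URLs are made up for this example, and Python's parser may not match Google's behaviour in every edge case.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: every crawler is asked to stay out of /drafts/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /drafts/
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the rules in robots_txt let user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(is_allowed(ROBOTS_TXT, "https://example.com/blog/"))        # True
    print(is_allowed(ROBOTS_TXT, "https://example.com/drafts/post"))  # False
```

Note that this only models a crawler that chooses to obey the file; nothing in robots.txt technically stops a robot from requesting a disallowed URL.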

You might be thinking that it is also a good way to hide pages or information you’d rather keep confidential and don’t want to appear on Google. This is not what the robots.txt file is for: pages you want to hide can still end up in search results despite the robots.txt instructions, for instance if another page on your site links to the page you don’t want to appear.

While it is important to have this file, your site will still function without it and will still usually be crawled and indexed. An important reason it’s relevant to your site’s SEO is that improper usage can affect your site’s ranking.

What counts as improper usage of the robots file?

- An empty robots.txt file

- Using the wrong syntax

- Your robots.txt file contradicts your sitemap.xml file (if something is in your sitemap, it should not be blocked by your robots file)

- Using it to block private or sensitive pages instead of password protecting them

- Accidentally disallowing everything

- Your robots.txt file is over the 500 KiB size limit that Google enforces

- Not saving your robots file in the root directory
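A few of the mistakes listed above can be checked automatically. The sketch below is one possible approach, not a complete validator: it covers only the empty file, the size limit, the "disallow everything" case and conflicts with sitemap URLs. The function name `lint_robots` and the `sitemap_urls` parameter are invented for this example, and the rule matching relies on Python's standard-library parser, which may differ from Google's in edge cases.

```python
from urllib.robotparser import RobotFileParser

MAX_BYTES = 500 * 1024  # the 500 KiB size limit


def lint_robots(robots_txt: str, sitemap_urls: list[str]) -> list[str]:
    """Return human-readable warnings for a few common robots.txt mistakes."""
    warnings = []
    if not robots_txt.strip():
        warnings.append("file is empty")
    if len(robots_txt.encode("utf-8")) > MAX_BYTES:
        warnings.append("file is larger than 500 KiB")

    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    # If even the home page is blocked for all crawlers, everything is blocked.
    if not parser.can_fetch("*", "/"):
        warnings.append("everything is disallowed")

    # URLs listed in the sitemap should not be blocked by robots.txt.
    for url in sitemap_urls:
        if not parser.can_fetch("*", url):
            warnings.append(f"sitemap URL is blocked: {url}")
    return warnings


if __name__ == "__main__":
    # Hypothetical rules and URLs, used only to show the output format.
    rules = "User-agent: *\nDisallow: /shop/\n"
    for warning in lint_robots(rules, ["https://example.com/shop/hats"]):
        print(warning)
```

Syntax errors and a file saved outside the root directory are not detected here; those still need a dedicated testing tool or a manual check.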

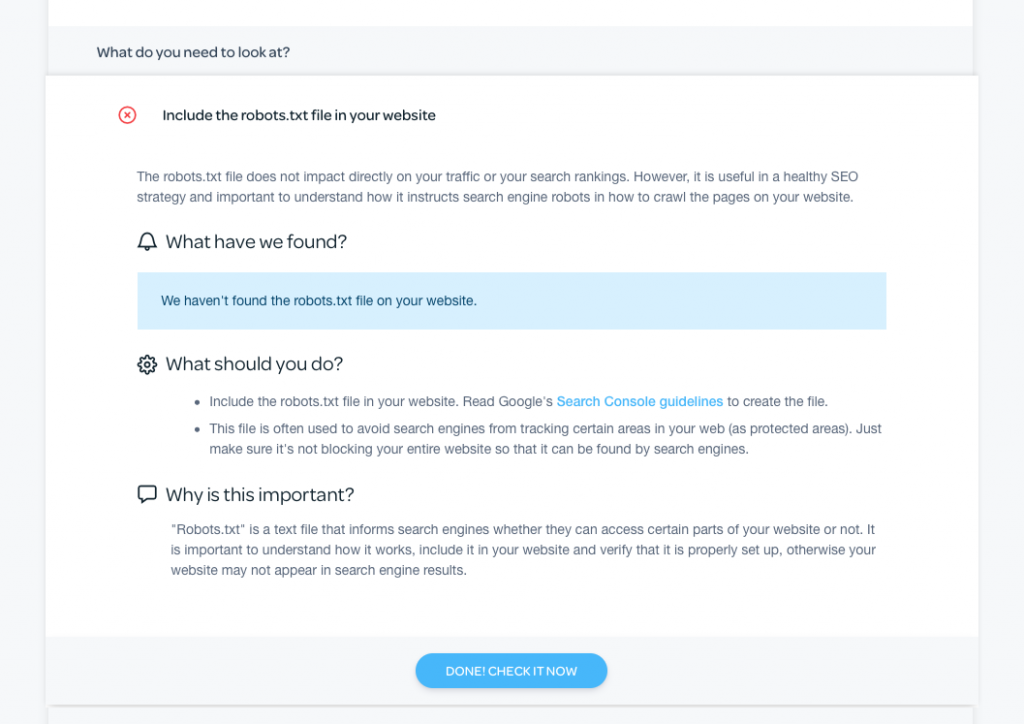

What does the task look like on marketgoo?

Within marketgoo, the task falls within the “Review Your Site” category. The task is simple, because if we detect a robots.txt file on your site, we will just make sure you know what it’s for and that it should be properly set up.

robots.txt on Weebly

If you’re on Weebly, your website automatically includes a robots.txt file that you can use to control search engine indexing for specific pages or for your entire site. You can view your robots file by going to www.yourdomain.com/robots.txt or yourdomain.weebly.com/robots.txt (using your website name instead of ‘yourdomain’).

The default setting is to allow search engines to index your entire site. If you want to prevent your entire website from being indexed by search engines, do the following:

- Go to the Settings tab in the editor and click on the SEO section

- Scroll down to the “Hide site from search engines” toggle

- Switch it to the On position

- Re-publish your site

If you only want to protect some of your pages from being indexed, do the following:

- Go to the SEO Settings menu

- Check that the “Hide site from search engines” toggle is set to Off.

- Go to the Pages tab and click on the page you want to hide

- Click on the SEO Settings button

- Click the checkbox to hide the page from search engines

- Click on the back arrow at the top to save your changes

You can change this as many times as you want, but remember that search engines take a while to figure it out and reflect it in their results.

There are some things that have been blocked and you can’t change on Weebly – like the directory where uploaded files for Digital Products are stored. These will not have any negative effect on your site or its search engine ranking.

Note: Google Search Console may give you a warning about ‘severe health issues’ regarding your Weebly site’s robots file. This is related to the blocked files described above, so don’t worry.

Square Websites

Please note that the ability to manually edit the robots.txt file isn’t available with Square Online stores at the moment.

If you want to hide a page from search engines: go to the site editor and open up the page you want to hide.

- Select the gear icon in the upper left and select View page settings.

- In the popup window, look for the SEO section and change Search Visibility to “Hidden from search engine results”.

- Finish by clicking “Save”.

robots.txt on Wix

If you are on Wix, you should know that Wix automatically generates a robots file for every site created using its platform. You can view this file by adding ‘/robots.txt’ to your root domain (www.domain.com/robots.txt, replacing domain.com with your actual domain name). If you look at what’s in your robots.txt file, you will see that it may already contain instructions to prevent crawling of areas that do not contribute to your site’s SEO.

It is possible to edit the robots.txt file of your Wix site, but as Wix notes in their instructions, this is an advanced feature and you should proceed with caution.

To edit it:

- Go to your site’s dashboard.

- Click on Marketing & SEO.

- Click SEO Tools.

- Click Robots.txt File Editor.

- Click View File.

- Add your robots.txt file info by writing the directives in the box below the text “This is your current file:”

Remember to save changes, and to read Wix’s own Support documentation regarding editing your robots file.

If you don’t want a specific page of your site to appear in search engine results, you can hide it in the Page Editor section:

- Click Menus & Pages in the top left bar of the Editor

- Click the page you want to hide

- Click the […] icon

- Click SEO (Google)

- Click the toggle next to Show this page in search results to turn it off. This means that people cannot find your page when searching keywords and phrases in search engines.

- Remember to save and publish whenever you make any changes.

Note: If you’re using Wix ADI to build your site, there are slightly different instructions for you.

If you choose to password protect a page, this too prevents search engines from crawling and indexing that page. This means that password protected pages do not appear in search results.

Finally, follow these instructions to hide your entire site from search engines.

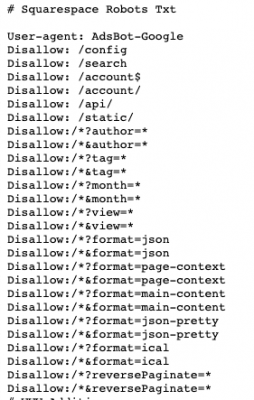

robots.txt on Squarespace

This is yet another platform that automatically generates a robots file for every site. Squarespace uses the robots.txt file to tell search engines that certain URLs on a site are restricted. They do this because these pages are only for internal use, or because the URLs show duplicate content (which can negatively affect your SEO). If you use a tool like Google Search Console, it will show you an alert about these restrictions that Squarespace has set in the file.

As an example, Squarespace notes that they ask Google not to crawl URLs like /config/, which is your Admin login page, or /api/, which is the Analytics tracking cookie. This makes sense.

Additionally, if you see plenty of disallows in your robots.txt file, this is also normal: Squarespace adds them to prevent duplicate content (which can appear in these pages).

To hide content on your Squarespace site, you can add a noindex tag through Code Injection or check the option Hide this page from search results in the SEO tab of your page settings. See a video of these instructions here.
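The noindex tag mentioned above is a `<meta name="robots" content="noindex">` element in the page's `<head>`. If you want to confirm that a page actually carries it, a minimal sketch along these lines could help. It uses only Python's standard library; the class and function names are invented for this example, and you would need to supply the page's HTML yourself (for instance, copied from your browser's "View source").

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # A tag may list several directives, e.g. "noindex, nofollow".
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with noindex."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives


if __name__ == "__main__":
    # Hypothetical page source, used only for illustration.
    page = '<html><head><meta name="robots" content="noindex"></head></html>'
    print(has_noindex(page))  # True
```

Keep in mind that a crawler can only see this tag if it is allowed to fetch the page, so a page that is both disallowed in robots.txt and tagged noindex may still show up as “indexed, though blocked by robots.txt”.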

If you are getting warnings from Google Search Console, for instance that a page is “indexed, though blocked by robots.txt”, you can check this handy guide about understanding what some of these errors mean and if you should ignore them or take action.

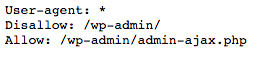

robots.txt on WordPress

If you’re on WordPress, your robots.txt file usually is in your site’s root folder. You can check by adding ‘/robots.txt’ to your root domain (www.domain.com/robots.txt – replacing domain.com with your actual domain name).

You will need to connect to your site using an FTP client or by using the cPanel file manager to view and edit the file. You can open it with a plain text editor like Notepad or TextEdit.

If you do not have a robots.txt file in your site’s root directory, then you can create one:

- Create a new text file on your computer and save it as robots.txt

- Upload it to your site’s root folder

This process can be a little cumbersome, and there is another option – you can instead create and edit the robots file with a plugin like Robots.txt Editor.

If you are using the Yoast or All in One SEO plugins, then you can generate and/or edit your robots.txt file from within the plugin.

You can use the robots.txt Tester in Google Search Console to make sure there are no errors and to validate which URLs from your site are blocked.

robots.txt on Shopify

- Shopify generates the robots.txt file automatically for your site.

- It’s not possible to edit the Robots.txt file for Shopify stores.

- If you want to hide certain pages from being indexed by Google, you need to customise the <head> section of your store’s theme.liquid layout file. To do so, follow these instructions.

- It’s normal to sometimes get a warning from within Google Search Console, telling you some items are blocked. Shopify blocks certain pages from being indexed, like your actual Cart page, or a filtered collection, which has + in the URL. So if you see something like the following, it’s normal:

- Disallow: /collections/+

- Disallow: /collections/%2B

Robots.txt Best Practices

- If you want to prevent crawlers from accessing any private content on your website, then you have to password protect the area where it is stored. Robots.txt is only a guide for web robots, so they are technically under no obligation to follow it.

- Google Search Console offers a free Robots Tester, which scans and analyzes your file. You can test your file there to make sure it’s well set up. Log in, and under “Crawl” click on “robots.txt tester.” You can then enter the URL and you’ll see a green Allowed if everything looks good.

- You can use robots.txt to block files such as unimportant image or style files. But if the absence of these makes your page harder to understand for the search engine crawlers, then don’t block them, otherwise Google won’t entirely understand your site like you want it to.

- All bloggers, site owners and webmasters should be careful while editing the robots file; if you’re not sure, err on the side of caution!

If you want to get deep into the details, bookmark these guides from ContentKing and Search Engine Journal.

I just want to know whether my site has a robots.txt or not!

Just go to your browser and add “/robots.txt” to the end of your domain name! So, if your site is myapparelsite.com, what you type into the browser will be www.myapparelsite.com/robots.txt. If the file exists, your browser will display its contents.
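If you would rather check from a script than from the browser, the same idea can be sketched in Python with the standard library. The helper names `robots_url` and `has_robots_txt` are invented for this example, and the code assumes HTTPS when you pass a bare domain name.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(site: str) -> str:
    """Build the robots.txt address for a domain name or a full URL."""
    if "://" not in site:
        site = "https://" + site  # assumption: use HTTPS when no scheme is given
    parts = urlsplit(site)
    # robots.txt always lives at the root, so any path or query is dropped.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def has_robots_txt(site: str, timeout: float = 10.0) -> bool:
    """Return True if the site answers the robots.txt request with HTTP 200."""
    try:
        with urlopen(robots_url(site), timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers HTTP errors such as 404, connection failures and timeouts.
        return False


if __name__ == "__main__":
    print(robots_url("www.myapparelsite.com"))
```

Calling `has_robots_txt` makes a real network request, so its result depends on the site being reachable at that moment.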

Robots.txt Tester

If you’re a marketgoo user, marketgoo will tell you automatically whether it detects it or not. You can further test it within Google Search Console.

If you’re not a marketgoo user (yet 😉 ), there are other tools you can use to see if you have a working robots.txt file, such as Ryte’s free test tool, or a site audit by SE Ranking which can detect robots.txt errors along with other issues that may impact your website’s performance.

Robots.txt Generator

Here is a free tool to generate a robots.txt file yourself.
